Problem

In data warehousing applications during ETL (Extraction, Transformation and Loading) or even in OLTP (On Line Transaction Processing) applications we are often encountered with duplicate records in our table. To make the table data consistent and accurate we need to get rid of these duplicate records keeping only one of them in the table. In this tip I discuss different strategies which you can take for this, along with the pros and cons.Solution

There are different methods for deleting duplicate (de-duplication) records from a table, each of them has its own pros and cons. I am going to discuss these methods, prerequisite of each of these methods along with its pros and cons.

- Using correlated subquery

- Using temporary table

- Creating new table with distinct records and renaming it..

- Using Common Table Expression (CTE)

- Using Fuzzy Group Transformation in SSIS

- Using MERGE Statement

1. Using correlated subquery

If you already have a identity column on your table, your work is half done. You can use a correlated subquery to get rid of the duplicates.

First let me briefly tell you how a correlated subquery works. In a correlated subquery, first outer query is evaluated, the result from the outer query is used by an inner sub query for its evaluation, whatever the outcome of the inner sub-query is again used by the outer query to get the final resultset. To learn more about correlated subqueries, you can click here.

In the example below, for the data deletion I am joining the inner query columns with the outer query to find the record with the maximum ID (you can even use minimum also and change the predicate to ">" from "<"). Then I am deleting all the records which has an ID less than what we have got from the inner query.

Please note, this approach can be taken only if you have identity column on the target table or you are willing to alter your target table to add an identity column which would require ALTER TABLE permission.

Script #1 - De-duplication with correlated subquery |

CREATE TABLE Employee

(

[ID] INT IDENTITY,

[FirstName] Varchar(100),

[LastName] Varchar(100),

[Address] Varchar(100),

)

GO

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Linda', 'Mitchel', 'America')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Linda', 'Mitchel', 'America')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('John', 'Albert', 'Australia')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('John', 'Albert', 'Australia')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('John', 'Albert', 'Australia')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

GO

SELECT * FROM Employee

GO

--Selecting distinct records

SELECT * FROM Employee E1

WHERE E1.ID = ( SELECT MAX(ID) FROM Employee E2

WHERE E2.FirstName = E1.FirstName AND E1.LastName = E2.LastName

AND E1.Address = E2.Address)

GO

--Deleting duplicates

DELETE Employee

WHERE ID < ( SELECT MAX(ID) FROM Employee E2

WHERE E2.FirstName = Employee.FirstName AND E2.LastName =Employee.LastName

AND E2.Address = Employee.Address)

GO

SELECT * FROM Employee

GO |

2. Using temporary table

In this approach we pull distinct records from the target table into a temporary table, then truncate the target table and finally insert the records from the temporary table back to the target table as you can see in Script #3.

Three things you need to be aware of when you are using this approach.

First you need to make sure you have or set enough size for tempdb database to hold all the distinct records especially if it is very large result-set.

Second you need to make sure you perform this operation in a transaction, at least the TRUNCATE and INSERT parts so that you are not left with an another problem if it fails in between for any reason.

Third you need to have the required permissions for object creation/truncation.

Script #2, creates a table and inserts some records along with some duplicate records which we will be using in all further examples.

Script #2 - Creating a table with duplicate records |

CREATE TABLE Employee

(

[FirstName] Varchar(100),

[LastName] Varchar(100),

[Address] Varchar(100),

)

GO

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Linda', 'Mitchel', 'America')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Linda', 'Mitchel', 'America')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('John', 'Albert', 'Australia')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('John', 'Albert', 'Australia')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('John', 'Albert', 'Australia')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

INSERT INTO Employee([FirstName], [LastName], [Address])

VALUES ('Arshad', 'Ali', 'India')

GO

SELECT * FROM Employee

GO |

Script #3 - Using temporary table |

BEGIN TRAN

-- Pull distinct records in the temporary table

SELECT DISTINCT * INTO #Employee

FROM Employee

--Truncate the target table

TRUNCATE TABLE Employee

--Insert the distinct records from temporary table

--back to target table

INSERT INTO Employee SELECT * FROM #Employee

--Drop the temporary table

IF OBJECT_ID('tempdb..#Employee') IS NOT NULL

DROP TABLE #Employee

COMMIT TRAN

GO

SELECT * FROM Employee

GO |

3. Creating new table with distinct records and renaming it

In this approach we create a new table with all distinct records, drop the existing target table and rename the newly created table with the original target table name. Please note, with this approach the meta-data about the target table will change for example object id, object creation date etc. so if you have any dependencies on these you have to take them into consideration.

Three things you need to aware of when you are using this approach.

First you need to make sure you have enough space in your database in the default filgroup (if you want your new table to be on some other file group than the default filegroup then you need to create a table first and then use INSERT INTO....SELECT * FROM) to hold all the distinct records especially if it is very large result-set.

Second you need to make sure you perform this operation in a transaction, at least the DROP and RENAME part so that you are not left with an another problem if it fails in between for any reason.

Third you need to have required permissions for object creation/drop.

Script #4 - New table with distinct only |

BEGIN TRAN

-- Pull distinct records in a new table

SELECT DISTINCT * INTO EmployeeNew

FROM Employee

--Drop the old target table

DROP TABLE Employee

--rename the new table

EXEC sp_rename 'EmployeeNew', 'Employee'

COMMIT TRAN

GO

SELECT * FROM Employee

GO |

4. Using Common Table Expression (CTE)

SQL Server 2005 introduced Common Table Expression (CTE) which acts as a temporary result set that is defined within the execution scope of a single SELECT, INSERT, UPDATE, DELETE, or CREATE VIEW statement.

In this example I am using a CTE for de-duplication. I am using the ROW_NUMBER function to return the sequential number of each row within a partition of a result set which is a grouping based on [FirstName], [LastName], [Address] columns (or columns of the table) and then I am deleting all records except where the sequential number is 1. This means keeping one record from the group and deleting all other similar/duplicate records. This is one of the efficient methods to delete records and I would suggest using this if you have SQL Server 2005 or 2008.

Script #5 - Using CTE for de-duplication |

--example 1

WITH CTE AS

(

SELECT ROW_NUMBER() OVER

(PARTITION BY [FirstName], [LastName], [Address]

Order BY [FirstName] DESC, [LastName] DESC, [Address] DESC )

AS RowNumber

FROM Employee tbl

WHERE EXISTS (SELECT TOP 1 1 FROM (SELECT FirstName,LastName,Address

FROM Employee

GROUP BY [FirstName], [LastName], [Address] HAVING COUNT(*) > 1 )GrpTable

WHERE GrpTable.FirstName = tbl.FirstName AND

GrpTable.LastName = tbl.LastName AND GrpTable.Address = tbl.Address)

)

DELETE FROM CTE Where RowNumber > 1

GO

SELECT * FROM Employee

GO --A more simplified and faster example

WITH CTE AS

(

SELECT ROW_NUMBER() OVER

(PARTITION BY [FirstName], [LastName], [Address]

Order BY [FirstName] DESC, [LastName] DESC, [Address] DESC )

AS RowNumber,

[FirstName], [LastName], [Address]

FROM Employee tbl )

DELETE FROM CTE Where RowNumber > 1

GO

SELECT * FROM Employee

GO |

5. Using Fuzzy Group Transformation in SSIS

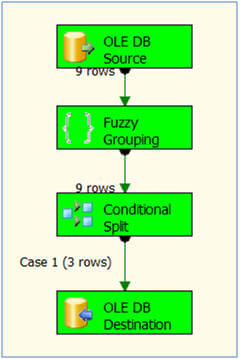

If you are using SSIS to upload data to your target table, you can use a Fuzzy Grouping Transformation before inserting records to the destination table to ignore duplicate records and insert only unique records. Here, in the image below, you can see 9 records are coming from source, but only 3 records are being inserted into the target table, that's because only 3 records are unique out of the 9 records. Refer to Script #2 above to see more about these 9 records that were used.

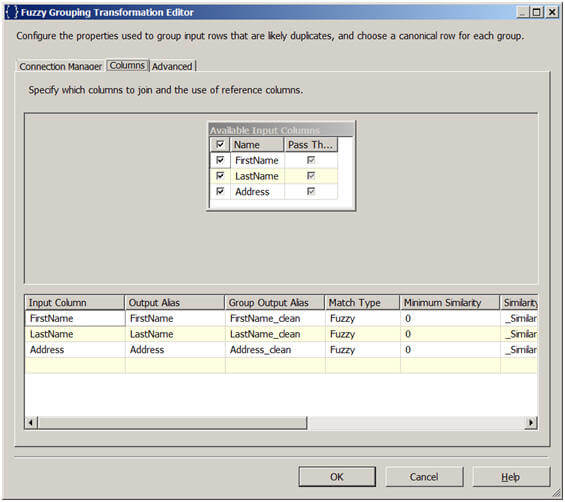

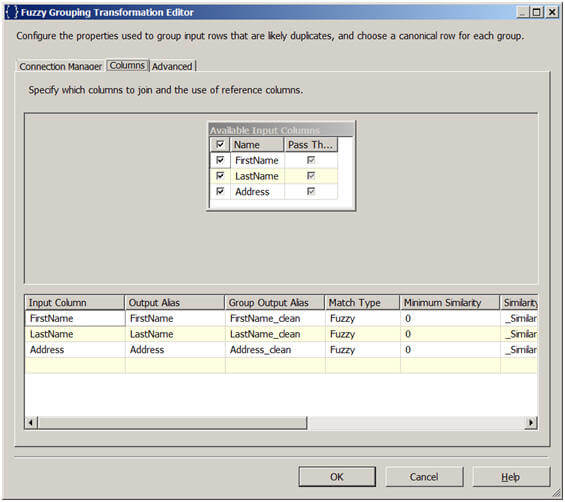

In the Fuzzy Grouping Transformation editor, on the Columns tab you specify the columns which you want to be included in grouping. As you can see in the below image I have chosen all 3 columns in my consideration for grouping.

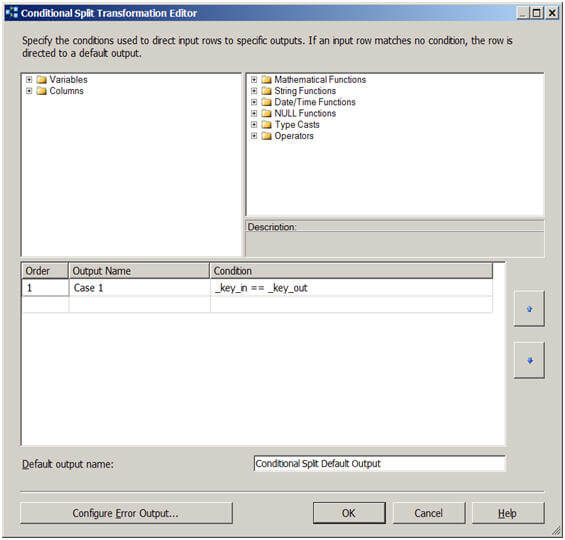

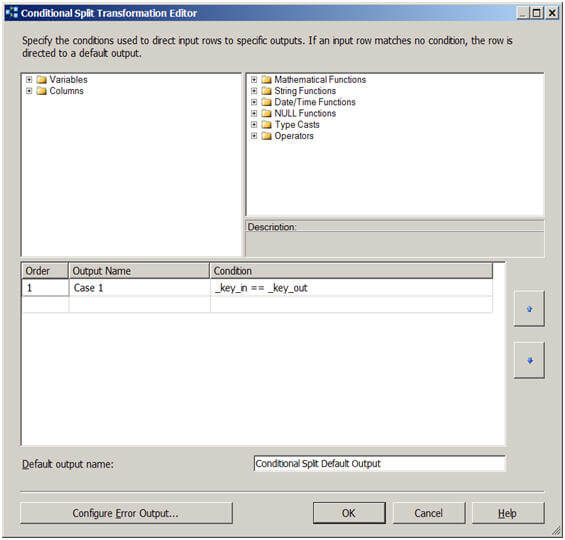

In the Fuzzy Grouping Transformation, you might add a conditional split to direct unique rows or duplicate rows to two destinations. Here in the example you can see I am routing all the unique rows to the destination table and ignoring the duplicate records. The Fuzzy Grouping Transformation produces a few additional columns like_key_in which uniquely identifies each rows, _key_out which identifies a group of duplicate records etc.

6. Using MERGE Statement

Beginning with SQL Server 2008, now you can use MERGE SQL command to perform INSERT/UPDATE/DELETE operations in a single statement. This new command is similar to the UPSERT (fusion of the words UPDATE and INSERT.) command of Oracle. It inserts rows that don’t exist and updates the rows that do exist. With the introduction of the MERGE SQL command, developers can more effectively handle common data warehousing scenarios, like checking whether a row exists and then executing an insert or update or delete.

The MERGE statement basically merges data from a source result set to a target table based on a condition that you specify and if the data from the source already exists in the target or not. The new SQL command combines the sequence of conditional INSERT, UPDATE and DELETE commands in a single atomic statement, depending on the existence of a record. With this you can make sure no duplicate records are being inserted into the target table, but rather updated if there is any change and only new records are inserted which do not already exist in the target. For more information about this you can click here.

Ref: http://www.mssqltips.com/tip.asp?tip=1918